Ivan Čilić / December 5, 2025

As the Internet of Things (IoT) evolves, billions of devices continuously generate data that must be processed quickly and reliably. Traditionally, this data was sent to the cloud for computation—but forwarding everything to remote data centers introduces bandwidth pressure and unacceptable delays, especially for real-time applications such as autonomous driving, industrial automation, or smart healthcare.

To address these limitations, modern architectures are shifting towards the Computing Continuum (CC)—a layered ecosystem where computation happens “as close as possible” to where data is produced. But this flexibility introduces significant complexity: how do we ensure that AIoT services in the CC continue to meet strict Quality of Service (QoS) requirements while nodes, workloads, and network conditions constantly fluctuate?

One of the important pieces of this puzzle is load balancing. And not just any load balancing—QoS-aware, decentralized, adaptive load balancing designed for the heterogeneous computing continuum.

In this post, we explore why traditional cloud-centric load balancing falls short at the edge, and how an approach like QEdgeProxy can deliver the responsiveness and reliability required in the CC.

A Motivating Example: Autonomous Driving on the Edge

Consider a real-world edge-AI workload: PilotNet, NVIDIA’s convolutional neural network (CNN) for end-to-end steering prediction. A car equipped with cameras continuously sends images as requests to PilotNet service instances running in the CC and receives steering-angle predictions as replies. The relevant metric for this loop is the service response time (latency), which covers the request-reply transmission in addition to the CNN inference itself. For example, we can require that each prediction be returned within 80 ms; otherwise, the vehicle’s control loop may react too slowly, potentially causing unsafe driving behavior.

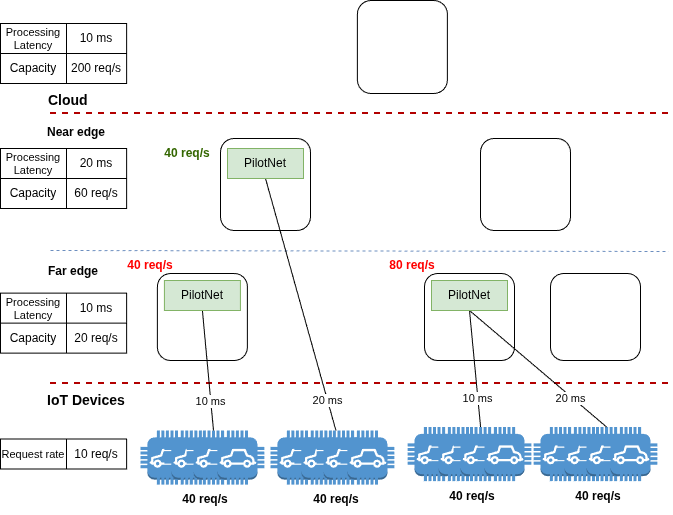

Figure 1. Motivating example illustration.

In the CC deployment illustrated in Figure 1, multiple cars send requests to PilotNet services deployed on the following nodes:

- Far-edge devices (e.g., NVIDIA Orin Nano) which can perform inference in ~30 ms,

- Near-edge servers (e.g., AGX Orin) which reduce the processing time to ~20 ms, and

- Cloud GPUs (e.g., NVIDIA A100) which are the fastest in terms of processing (~10 ms) but introduce long network delays—40–60 ms latency.

With network delays included, the "fastest" computing resource might still violate the end-to-end QoS requirement. And since many vehicles may share the same far-edge hardware, as shown in Figure 1, overload becomes a real threat: once a node receives more requests than it can process, response times spike and QoS is broken.
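The budget arithmetic behind this observation can be sketched as follows (in Python, for brevity). The inference times are the ones quoted above. The network round-trip times for the two edge tiers and the queueing delays are illustrative assumptions, and the cloud figure takes the upper end of the 40–60 ms range, treating it as the full request-reply transmission time.

```python
from dataclasses import dataclass

QOS_DEADLINE_MS = 80.0  # per-prediction deadline from the example


@dataclass(frozen=True)
class Tier:
    name: str
    processing_ms: float   # inference time quoted in the text
    network_rtt_ms: float  # request-reply transmission time (assumed for edge tiers)


def response_time_ms(tier: Tier, queueing_ms: float = 0.0) -> float:
    """End-to-end response time: network + time waiting in a queue + inference."""
    return tier.network_rtt_ms + queueing_ms + tier.processing_ms


def slack_ms(tier: Tier, queueing_ms: float = 0.0) -> float:
    """Budget left before the deadline is violated (negative means violated)."""
    return QOS_DEADLINE_MS - response_time_ms(tier, queueing_ms)


tiers = [
    Tier("far-edge", processing_ms=30.0, network_rtt_ms=5.0),    # RTT is an assumption
    Tier("near-edge", processing_ms=20.0, network_rtt_ms=15.0),  # RTT is an assumption
    Tier("cloud", processing_ms=10.0, network_rtt_ms=60.0),      # upper end of 40-60 ms
]

for tier in tiers:
    print(f"{tier.name:9s} idle: {response_time_ms(tier):5.1f} ms, "
          f"slack: {slack_ms(tier):5.1f} ms")
```

Under these assumptions, the cloud GPU has only 10 ms of slack despite having the shortest inference time, so a modest queueing delay pushes it past the deadline. The far-edge node has more slack, but an overload that adds 50 ms of queueing breaks it as well.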

This example illustrates three fundamental challenges of the CC:

- Placement: Where should service instances be deployed so that clients can reach at least one instance that can meet their QoS requirements—and without overloading the hardware?

- Routing: Once instances are deployed, how should client requests be distributed across them to maintain QoS satisfaction under changing workloads?

- Dynamicity: CC nodes may fail, bandwidth fluctuates, and clients move. How do we keep the placement and routing strategies up-to-date in real time?

These challenges highlight why CC workloads require not only smart orchestration, but also intelligent, decentralized routing.

Why Traditional Load Balancing Doesn’t Work at the Edge

In cloud environments, global controllers (like Kubernetes schedulers or service meshes) often make routing decisions based on a consolidated view of cluster state.

At the edge, this model breaks down because:

- No single node has complete or up-to-date global knowledge.

- Network conditions vary significantly between locations.

- Workloads shift unpredictably (e.g., IoT devices entering or leaving a zone).

- Edge devices are often resource-constrained or have unstable connections.

Thus, routing must be localized, adaptive, and able to operate independently at each node. This is where decentralized load balancing becomes essential.

Introducing QEdgeProxy: Decentralized QoS-aware Load Balancer for the CC

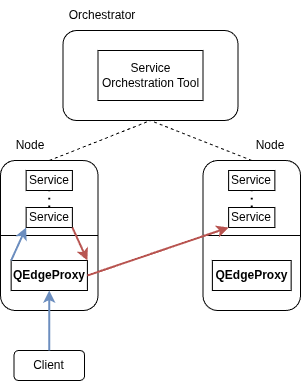

To enable adaptive routing without overburdening IoT devices or relying on global coordination, we propose QEdgeProxy [1]: a lightweight, decentralized load balancer deployed on every node of the continuum, as shown in Figure 2.

Figure 2. QEdgeProxy in the CC.

What does QEdgeProxy do?

- Maintains a QoS pool: It continuously learns which service instances are likely to meet the QoS targets (e.g., <80 ms latency).

- Routes requests adaptively: When a client sends a request, the proxy selects an instance from its QoS pool based on recent QoS measurements and balancing strategies.

- Handles dynamic system changes: If nodes fail, new instances appear, or network conditions shift, the QoS pool updates automatically.

- Operates without global coordination: Each proxy acts independently, relying only on local observations and occasional updates from the orchestrator.

This decentralized approach ensures resilience and scalability in environments where centralized solutions would be too slow or too fragile.
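To make the routing step concrete, here is a minimal Python sketch of selecting an instance from a QoS pool. It is an illustration under stated assumptions, not the QEdgeProxy implementation: the function names, the 0.9 admission threshold, the probability-weighted random choice, and the fallback rule are all hypothetical. Each proxy is assumed to hold a local estimate of how likely each instance is to meet the QoS target.

```python
import random
from typing import Dict, List


def build_qos_pool(success_prob: Dict[str, float], min_prob: float = 0.9) -> List[str]:
    """Instances whose estimated chance of meeting the QoS target is high enough."""
    return sorted(name for name, p in success_prob.items() if p >= min_prob)


def pick_instance(success_prob: Dict[str, float], min_prob: float = 0.9, rng=random) -> str:
    """Choose a target for one request using only this proxy's local estimates."""
    if not success_prob:
        raise LookupError("no known instances for this service")
    pool = build_qos_pool(success_prob, min_prob)
    if not pool:
        # Assumed fallback: nothing qualifies, so use the least bad instance.
        return max(success_prob, key=success_prob.get)
    # Spread load across the pool, favouring instances with better estimates.
    weights = [success_prob[name] for name in pool]
    return rng.choices(pool, weights=weights, k=1)[0]


# Hypothetical local estimates held by one proxy.
estimates = {"far-edge-1": 0.97, "near-edge-1": 0.93, "cloud-1": 0.40}
print(build_qos_pool(estimates))
print(pick_instance(estimates))
```

Choosing randomly within the pool, rather than always taking the best instance, is one simple way to avoid all proxies piling onto the same node.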

How the QoS Pool Works

A QoS pool is a dynamically maintained set of service instances that, based on observed data, appear capable of fulfilling the QoS requirements.

To build and maintain this set, QEdgeProxy reacts to four types of events:

- First request arrival: The proxy initializes a QoS pool using environmental estimates or historical data.

- Instance lifecycle events: When an orchestrator creates or removes service instances, the proxy updates its pool.

- New QoS measurements: After each request, the proxy updates its belief about the instance’s performance.

- Environmental changes: Large shifts in network latency or node load trigger reevaluation.

Over time, the proxy converges toward accurate routing behavior—selecting instances that reliably meet the client’s QoS requirements.
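The four event types can be sketched as handlers on a small pool object. This Python sketch is a deliberate simplification and not the actual QEdgeProxy logic: the class and method names are hypothetical, instances start with an optimistic estimate instead of environmental or historical data, the belief is just the fraction of recent requests that met the latency target, and an environmental change simply discards stale samples.

```python
from collections import deque
from typing import Deque, Dict, Iterable, List


class QoSPool:
    def __init__(self, latency_target_ms: float = 80.0,
                 min_success_ratio: float = 0.9, window: int = 50):
        self.latency_target_ms = latency_target_ms
        self.min_success_ratio = min_success_ratio
        self.window = window  # number of recent measurements kept per instance
        self.samples: Dict[str, Deque[float]] = {}

    # Event 1: first request arrival
    def on_first_request(self, known_instances: Iterable[str]) -> None:
        for name in known_instances:
            self.on_instance_created(name)

    # Event 2: instance lifecycle events reported by the orchestrator
    def on_instance_created(self, name: str) -> None:
        self.samples.setdefault(name, deque(maxlen=self.window))

    def on_instance_removed(self, name: str) -> None:
        self.samples.pop(name, None)

    # Event 3: new QoS measurement after a request completes
    def on_measurement(self, name: str, latency_ms: float) -> None:
        if name in self.samples:
            self.samples[name].append(latency_ms)

    # Event 4: environmental change; old samples no longer describe the system
    def on_environment_change(self) -> None:
        for history in self.samples.values():
            history.clear()

    def success_ratio(self, name: str) -> float:
        history = self.samples[name]
        if not history:
            return 1.0  # optimistic until measured, so new instances get tried
        hits = sum(1 for x in history if x <= self.latency_target_ms)
        return hits / len(history)

    def members(self) -> List[str]:
        return sorted(n for n in self.samples
                      if self.success_ratio(n) >= self.min_success_ratio)
```

For example, after `on_first_request(["a", "b"])` both instances are in the pool. A run of 120 ms measurements for `"b"` removes it, and a subsequent `on_environment_change()` lets it be probed again.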

Various techniques can be utilized for predicting QoS based on recent QoS measurements and interactions with the node running the instance, such as Kernel Density Estimation (KDE), Long Short-Term Memory (LSTM) networks, reinforcement learning, and other statistical or machine learning methods. These techniques will be examined further in our upcoming work—stay tuned!
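As a rough illustration of the KDE option, the probability that an instance answers within the latency target can be estimated by placing a Gaussian kernel on each recent measurement and averaging the kernels' cumulative distributions at the target. The Python sketch below is an assumption-laden example and not the method used in QEdgeProxy: the function name, the normal-reference bandwidth rule (1.06 · σ · n^(−1/5)), and the 1 ms bandwidth floor are illustrative choices.

```python
from statistics import NormalDist, stdev
from typing import Sequence


def kde_prob_within(latencies_ms: Sequence[float], target_ms: float,
                    min_bandwidth_ms: float = 1.0) -> float:
    """Estimate P(latency <= target_ms) from recent samples with a Gaussian KDE."""
    n = len(latencies_ms)
    if n == 0:
        raise ValueError("need at least one latency sample")
    spread = stdev(latencies_ms) if n > 1 else 0.0
    # Normal-reference rule of thumb, floored so identical samples still work.
    bandwidth = max(1.06 * spread * n ** (-1 / 5), min_bandwidth_ms)
    std_normal = NormalDist()
    # The CDF of a Gaussian KDE is the average of the per-sample Gaussian CDFs.
    return sum(std_normal.cdf((target_ms - x) / bandwidth) for x in latencies_ms) / n


print(kde_prob_within([30, 32, 35, 31, 33], target_ms=80))  # comfortably within target
print(kde_prob_within([70, 75, 85, 90], target_ms=80))      # borderline instance
print(kde_prob_within([120, 130, 125], target_ms=80))       # consistently too slow
```

An instance could then be admitted to the QoS pool when this estimate exceeds a chosen threshold. Compared with a plain success ratio, the smoothed estimate also reflects how close the observed latencies are to the target.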

QEdgeProxy in Practice: Implementation within Kubernetes

QEdgeProxy is implemented as a lightweight external component running on Kubernetes. Neither the built-in Kubernetes mechanisms (such as NodePort services or Service traffic policies that prefer node-local endpoints) nor service meshes such as Istio support adaptive, QoS-driven routing, so we deploy QEdgeProxy as a DaemonSet, which ensures that every node in the cluster runs its own proxy instance.

Each proxy is a small Golang HTTP server that connects to the Kubernetes API to discover service instances, monitor node and pod events, and react to changes in the environment. A client names the target service in an HTTP request header; when the request arrives, QEdgeProxy selects an appropriate instance from its QoS pool and forwards the request directly to the target pod.
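The dispatch step can be sketched in a few lines. The real proxy is written in Go; Python is used here only for brevity. The header name `X-Service-Name`, the function `resolve_target`, and the shape of the pod address table are hypothetical and are not taken from the QEdgeProxy code base.

```python
from typing import Callable, Mapping, Tuple

SERVICE_HEADER = "X-Service-Name"  # hypothetical header name, chosen for illustration


def resolve_target(headers: Mapping[str, str], path: str,
                   pick_instance: Callable[[str], str],
                   pod_addresses: Mapping[str, Tuple[str, int]]) -> str:
    """Map an incoming request to the URL of the pod that should serve it."""
    # HTTP header names are case-insensitive, so normalise before the lookup.
    lowered = {key.lower(): value for key, value in headers.items()}
    service = lowered.get(SERVICE_HEADER.lower())
    if not service:
        raise ValueError(f"missing {SERVICE_HEADER} header")
    instance = pick_instance(service)      # selection from this service's QoS pool
    host, port = pod_addresses[instance]   # pod IP and port learned from the Kubernetes API
    return f"http://{host}:{port}{path}"


# Usage with made-up values: one PilotNet pod known to this proxy.
url = resolve_target(
    {"x-service-name": "pilotnet"},
    "/predict",
    pick_instance=lambda service: "pilotnet-abc",
    pod_addresses={"pilotnet-abc": ("10.42.0.17", 8080)},
)
print(url)
```

Forwarding to the pod address directly, rather than through a Kubernetes Service, is what lets the proxy decide which instance handles each request.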

The full implementation is open source:

👉 https://github.com/AIoTwin/qedgeproxy

Why QoS-aware Load Balancing Matters

QoS-aware routing is crucial because simply choosing the closest or fastest instance is not enough in the CC. Considerations include:

- Latency budgets: Some nodes might be fast but too far away to meet the end-to-end deadline.

- Capacity constraints: A perfectly located far-edge node may become overloaded if too many clients use it.

- Workload dynamics: As demand fluctuates, the routing must adapt autonomously without human intervention.

A QoS pool maintained by QEdgeProxy allows the system to reason about these factors probabilistically, providing a soft guarantee that each request goes to an instance likely to meet its QoS requirements.

Toward Fully QoS-aware Systems for CC

The CC is becoming the backbone of real-time, data-driven IoT systems. But to unlock its full potential, we need mechanisms that:

- Understand and enforce QoS.

- Adapt routing dynamically.

- Operate robustly and autonomously in heterogeneous, distributed environments.

- Avoid burdening IoT devices with complex decision-making.

Decentralized QoS-aware load balancing, as embodied by QEdgeProxy, is a key enabler of such intelligent, efficient, and resilient edge systems.

As future IoT workloads demand even tighter QoS guarantees, and as edge deployments become more dynamic, approaches like these will be critical to ensuring consistent, reliable service delivery across the entire CC.

References

[1] I. Čilić, V. Jukanović, I. P. Žarko, P. Frangoudis and S. Dustdar, "QEdgeProxy: QoS-Aware Load Balancing for IoT Services in the Computing Continuum," 2024 IEEE International Conference on Edge Computing and Communications (EDGE), Shenzhen, China, 2024